Problem

$2^{15} = 32768$ and the sum of its digits is $3 + 2 + 7 + 6 + 8 = 26$.

What is the sum of the digits of the number $2^{1000}$?
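One way to approach this is with arbitrary-precision integers, since $2^{1000}$ is far too large for a 64-bit integer. The following is a minimal sketch using Go's `math/big`; the function name `digitSum` is an assumption made for illustration.

```go
package main

import (
	"fmt"
	"math/big"
)

// digitSum returns the sum of the decimal digits of 2^exp.
func digitSum(exp int64) int {
	// With a nil modulus, Exp computes the exact power.
	n := new(big.Int).Exp(big.NewInt(2), big.NewInt(exp), nil)
	sum := 0
	for _, ch := range n.String() {
		sum += int(ch - '0') // each rune is an ASCII digit
	}
	return sum
}

func main() {
	fmt.Println(digitSum(15)) // 26, matching the example above
	fmt.Println(digitSum(1000))
}
```

Converting to a decimal string keeps the code short; repeatedly dividing by 10 would work as well.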

Starting in the top left corner of a 2x2 grid, there are 6 routes (without backtracking) to the bottom right corner.

How many routes are there through a 20x20 grid?
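Assuming each route consists only of steps to the right and steps down, a route through an $n \times n$ grid is a sequence of $2n$ steps of which exactly $n$ go right, so the count is the binomial coefficient $\binom{2n}{n}$. The sketch below computes it incrementally; the function name `routes` is hypothetical.

```go
package main

import "fmt"

// routes counts the right/down paths through an n x n grid,
// which equals the central binomial coefficient C(2n, n).
func routes(n uint64) uint64 {
	result := uint64(1)
	for i := uint64(1); i <= n; i++ {
		// After step i, result == C(n+i, i); the division is always exact.
		result = result * (n + i) / i
	}
	return result
}

func main() {
	fmt.Println(routes(2)) // 6, matching the example above
	fmt.Println(routes(20))
}
```

For $n = 20$ all intermediate products fit comfortably in a `uint64`; much larger grids would need `math/big`.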

Artificial neural networks have gained attention in recent years, driven by advances in deep learning. But what is an artificial neural network, and what is it made of?

Meet the perceptron.

In this article we’ll have a quick look at artificial neural networks in general, then we examine a single neuron, and finally (this is the coding part) we take the most basic version of an artificial neuron, the perceptron, and make it classify points on a plane.

But first, let me introduce the topic.

Have you ever wondered why there are tasks that are dead simple for any human but incredibly difficult for computers? Artificial neural networks (ANNs for short) were inspired by the central nervous system of humans. Like their biological counterpart, ANNs are built upon simple signal processing elements that are connected together into a large mesh.

ANNs have been successfully applied to a number of problem domains:

Agreed, this sounds a bit abstract, so let’s look at some real-world applications. Neural networks can -

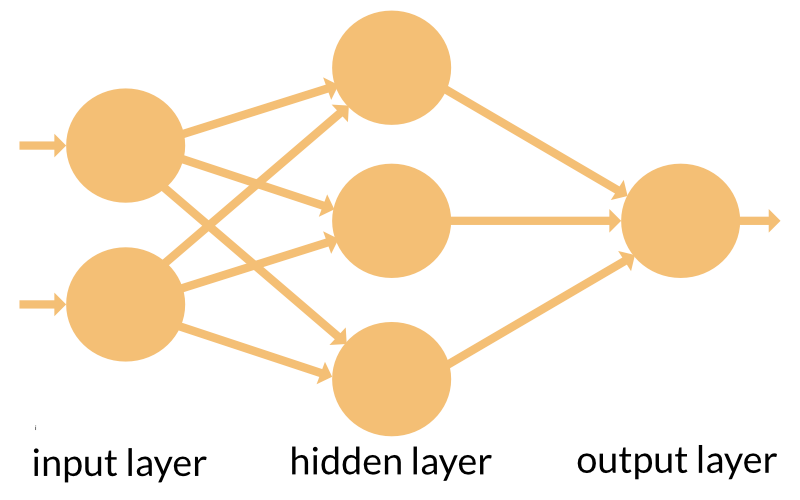

There are many ways of knitting the nodes of a neural network together, and each way results in a more or less complex behavior. Possibly the simplest of all topologies is the feed-forward network. Signals flow in one direction only; there is never any loop in the signal paths.

Typically, ANNs have a layered structure. The input layer picks up the input signals and passes them on to the next layer, the so-called ‘hidden’ layer. (Actually, there may be more than one hidden layer in a neural network.) Last comes the output layer that delivers the result.

Unlike traditional algorithms, neural networks cannot be ‘programmed’ or ‘configured’ to work in the intended way. Just like human brains, they have to learn how to accomplish a task. Roughly speaking, there are three learning strategies:

Supervised learning: The easiest way. It can be used if a (large enough) set of test data with known results exists. The learning then goes like this: process one dataset, compare the output against the known result, adjust the network, and repeat. This is the learning strategy we’ll use here.

Unsupervised learning: Useful if no test data is readily available, and if it is possible to derive some kind of cost function from the desired behavior. The cost function tells the neural network how far it is off the target. The network can then adjust its parameters on the fly while working on the real data.

Reinforcement learning: The ‘carrot and stick’ method. It can be used if the neural network generates continuous action. Follow the carrot in front of your nose! If you go the wrong way - ouch. Over time, the network learns to prefer the right kind of action and to avoid the wrong one.

Ok, now we know a bit about the nature of artificial neural networks, but what exactly are they made of? What do we see if we open the cover and peek inside?

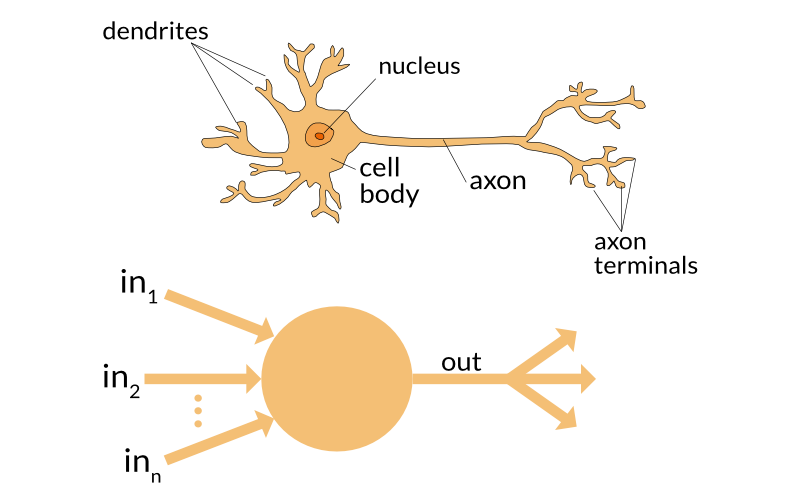

The most basic ingredient of any artificial neural network is the artificial neuron. Artificial neurons are not only named after their biological counterparts but are also modeled after the behavior of the neurons in our brain.

Just like a biological neuron has dendrites to receive signals, a cell body to process them, and an axon to send signals out to other neurons, the artificial neuron has a number of input channels, a processing stage, and one output that can fan out to multiple other artificial neurons.

When a signal comes in, it gets multiplied by a weight value that is assigned to this particular input. That is, if a neuron has three inputs, then it has three weights that can be adjusted individually. During the learning phase, the neural network can adjust the weights based on the error of the last test result.

In the next step, the modified input signals are summed up to a single value. In this step, an offset is also added to the sum. This offset is called bias. The neural network also adjusts the bias during the learning phase.
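The two steps described so far, weighting the inputs and summing them together with the bias, can be sketched as a single function. The name `weightedSum` and the sample values are assumptions chosen for illustration.

```go
package main

import "fmt"

// weightedSum multiplies each input by its weight, adds up the products,
// and adds the bias. inputs and weights must have the same length.
func weightedSum(inputs, weights []float64, bias float64) float64 {
	sum := bias
	for i, x := range inputs {
		sum += x * weights[i]
	}
	return sum
}

func main() {
	inputs := []float64{1, 2, 3}
	weights := []float64{0.5, -1, 2} // one weight per input
	fmt.Println(weightedSum(inputs, weights, 1)) // 0.5 - 2 + 6 + 1 = 5.5
}
```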

This is where the magic happens! At the start, all the neurons have random weights and random biases. After each learning iteration, weights and biases are gradually shifted so that the next result is a bit closer to the desired output. This way, the neural network gradually moves towards a state where the desired patterns are “learned”.

Finally, the result of the neuron’s calculation is turned into an output signal. This is done by feeding the result to an activation function (also called transfer function).

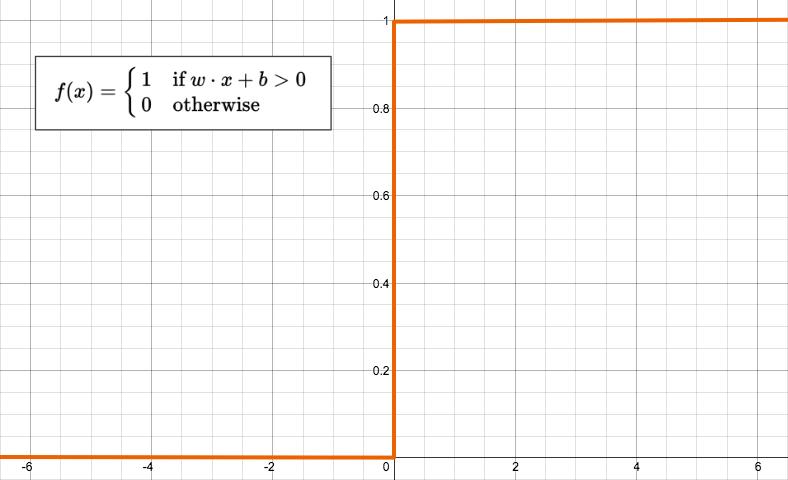

The most basic form of an activation function is a simple binary function that has only two possible results.

Despite looking so simple, the function has a quite elaborate name: The Heaviside Step function. This function returns 1 if the input is positive or zero, and 0 for any negative input. A neuron whose activation function is a function like this is called a perceptron.
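In code, the step function as described here is only a few lines. This is a minimal sketch; the function name `heaviside` is an assumption.

```go
package main

import "fmt"

// heaviside returns 1 if x is positive or zero, and 0 for any negative x.
func heaviside(x float64) int {
	if x >= 0 {
		return 1
	}
	return 0
}

func main() {
	fmt.Println(heaviside(2.5), heaviside(0), heaviside(-0.1)) // 1 1 0
}
```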

If you think about it, it looks as if the perceptron consumes a lot of information for very little output - just 0 or 1. How could this ever be useful on its own?

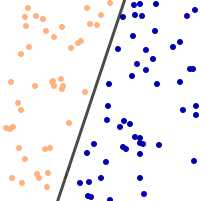

There is indeed a class of problems that a single perceptron can solve. Consider the input vector as the coordinates of a point. For a vector with n elements, this point would live in an n-dimensional space. To make life (and the code below) easier, let’s assume a two-dimensional plane. Like a sheet of paper.

Further consider that we draw a number of random points on this plane, and we separate them into two sets by drawing a straight line across the paper:

This line divides the points into two sets, one above and one below the line. (The two sets are then called linearly separable.)

A single perceptron, as bare and simple as it might appear, is able to learn where this line is, and once it has finished learning, it can tell whether a given point is above or below that line.

Imagine that: A single perceptron already can learn how to classify points!

Let’s jump right into coding, to see how.

    package main
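Only the opening line of the listing appears above. As a stand-in, here is a minimal sketch of a perceptron that learns to classify points as above or below a line, following the steps described earlier (weighted sum, bias, step function, adjustment by the error). It is not the original code: the type and function names, the line $y = 0.5x + 10$, the learning rate of 0.1, and the iteration counts are all assumptions chosen for illustration.

```go
package main

import (
	"fmt"
	"math/rand"
)

// Perceptron holds one weight per input, a bias, and a learning rate.
type Perceptron struct {
	weights []float64
	bias    float64
	rate    float64
}

// heaviside returns 1 for zero or positive input, 0 otherwise.
func heaviside(x float64) int {
	if x >= 0 {
		return 1
	}
	return 0
}

// Process computes the weighted sum plus bias and applies the step function.
func (p *Perceptron) Process(inputs []float64) int {
	sum := p.bias
	for i, x := range inputs {
		sum += x * p.weights[i]
	}
	return heaviside(sum)
}

// Adjust shifts weights and bias by the error on one training sample.
func (p *Perceptron) Adjust(inputs []float64, target int) {
	delta := float64(target - p.Process(inputs))
	for i, x := range inputs {
		p.weights[i] += delta * x * p.rate
	}
	p.bias += delta * p.rate
}

// isAbove returns 1 if (x, y) lies on or above the line y = a*x + b, else 0.
func isAbove(x, y, a, b float64) int {
	if y >= a*x+b {
		return 1
	}
	return 0
}

func main() {
	rng := rand.New(rand.NewSource(1)) // fixed seed for repeatable runs
	a, b := 0.5, 10.0                  // the line to learn (arbitrary choice)
	p := &Perceptron{
		weights: []float64{rng.Float64(), rng.Float64()}, // random start
		bias:    rng.Float64(),
		rate:    0.1,
	}
	randomPoint := func() (float64, float64) {
		return rng.Float64()*200 - 100, rng.Float64()*200 - 100
	}

	// Training: process a point, compare with the known answer, adjust.
	for i := 0; i < 1000; i++ {
		x, y := randomPoint()
		p.Adjust([]float64{x, y}, isAbove(x, y, a, b))
	}

	// Verification on fresh random points.
	const trials = 100
	correct := 0
	for i := 0; i < trials; i++ {
		x, y := randomPoint()
		if p.Process([]float64{x, y}) == isAbove(x, y, a, b) {
			correct++
		}
	}
	fmt.Printf("%d of %d test points classified correctly\n", correct, trials)
}
```

The training loop and the `rate` field are the two knobs to experiment with in this sketch.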

Play with the number of training iterations!

Change the learning rate to 0.01, 0.2, 0.0001, 0.5, 1,… while keeping the training iterations constant. Do you see the accuracy change?

The following iterative sequence is defined for the set of positive integers:

$$n \rightarrow
\begin{cases}
\tfrac{n}{2} & \text{if } n \text{ is even} \\
3n+1 & \text{if } n \text{ is odd}
\end{cases}$$

Using the rule above and starting with 13, we generate the following sequence:

$$13, 40, 20, 10, 5, 16, 8, 4, 2, 1$$

It can be seen that this sequence (starting at 13 and finishing at 1) contains 10 terms. Although it has not been proved yet (Collatz Problem), it is thought that all starting numbers finish at 1.

Which starting number, under one million, produces the longest chain?
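A direct search is feasible if chain lengths are cached, because most chains quickly run into a number whose length is already known. The sketch below takes that approach; the names `chainLength` and `longestStart` are assumptions, and the map-based cache is just one possible design.

```go
package main

import "fmt"

// chainLength returns the number of terms in the chain starting at n,
// counting both n and the final 1. Known lengths are stored in cache.
func chainLength(n uint64, cache map[uint64]int) int {
	if n == 1 {
		return 1
	}
	if l, ok := cache[n]; ok {
		return l
	}
	next := n / 2
	if n%2 == 1 {
		next = 3*n + 1
	}
	l := 1 + chainLength(next, cache)
	cache[n] = l
	return l
}

// longestStart returns the starting number below limit with the longest chain.
func longestStart(limit uint64) uint64 {
	cache := make(map[uint64]int)
	best, bestLen := uint64(1), 1
	for n := uint64(1); n < limit; n++ {
		if l := chainLength(n, cache); l > bestLen {
			best, bestLen = n, l
		}
	}
	return best
}

func main() {
	fmt.Println(chainLength(13, map[uint64]int{})) // 10, as in the example
	fmt.Println(longestStart(1000000))
}
```

Intermediate terms can grow well beyond one million, which is why the sketch uses `uint64` rather than a 32-bit type.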

The sequence of triangle numbers is generated by adding the natural numbers. So the 7th triangle number would be $1 + 2 + 3 + 4 + 5 + 6 + 7 = 28$. The first ten terms would be:

$$1, 3, 6, 10, 15, 21, 28, 36, 45, 55, \dots$$

Let us list the factors of the first seven triangle numbers:

1: 1

3: 1,3

6: 1,2,3,6

10: 1,2,5,10

15: 1,3,5,15

21: 1,3,7,21

28: 1,2,4,7,14,28

We can see that 28 is the first triangle number to have over five divisors. What is the value of the first triangle number to have over five hundred divisors?
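A straightforward approach is to generate triangle numbers one by one and count the divisors of each by trial division up to the square root. The following is a minimal sketch under that assumption; the names `countDivisors` and `firstTriangleOver` are hypothetical, and faster methods based on prime factorization exist.

```go
package main

import "fmt"

// countDivisors returns the number of positive divisors of n (n >= 1).
func countDivisors(n int) int {
	count := 0
	for d := 1; d*d <= n; d++ {
		if n%d == 0 {
			count += 2 // d and n/d
			if d*d == n {
				count-- // perfect square: d and n/d coincide
			}
		}
	}
	return count
}

// firstTriangleOver returns the first triangle number with more
// than limit divisors.
func firstTriangleOver(limit int) int {
	triangle := 0
	for k := 1; ; k++ {
		triangle += k // k-th triangle number
		if countDivisors(triangle) > limit {
			return triangle
		}
	}
}

func main() {
	fmt.Println(firstTriangleOver(5)) // 28, matching the example above
	fmt.Println(firstTriangleOver(500))
}
```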